Promised Data Unavailable? – I’m Sorry, Ma’am, There’s Nothing We Can Do

This blog post was written by Michèle Nuijten, an assistant professor in our research group who investigates reproducibility and replicability in psychology. She is also the developer of the tool “statcheck”, which automatically checks whether reported statistical results are internally consistent.

I’ve been working in the field of meta-science for long enough to know that “data are available upon request” should not be taken too seriously.

But I get a lot more enthusiastic if the Data Availability Statement is as specific as this:

“Who can access the data: On acceptance of this manuscript, a password-protected disclosure-controlled version of the trial data will be uploaded to the University of [XXX] repository. Access to this will be embargoed for 12 months from the date of publication to enable the research team to write planned papers.

Types of analyses: Data will be made available for any purpose.”

The study was published in 2023, and by the time we became interested in the data it was 2025: the embargo had expired, but the study was recent enough that we could trust the repository still existed. Fantastic, let’s go!

Little did we know that this promising statement marked the start of a nine-month-long endeavor to actually get our hands on said data. Nine months – and counting.¹

Verifying Claims

In this case, our data request was not even part of one of those obnoxious² meta-studies to prove that no one shares their data.³

No, in this particular case, the request for data was (is!) part of the thesis of my PhD student Dennis, who studies the evidence base of mindfulness-based interventions. As part of this, we decided to verify the results of a high-profile experiment with surprising results – a study that was the first to show that mindfulness-based cognitive therapy may be superior to cognitive behavioral therapy in the short term.

The clinical importance of this study, combined with its surprising outcome (and a few surprising methodological choices), made us want to take a closer look. We planned to verify the reported outcomes, run a few robustness analyses, and write the whole thing up in a preregistered Verification Report. How wonderful it would be to see scientific self-correction in action!

False Promises

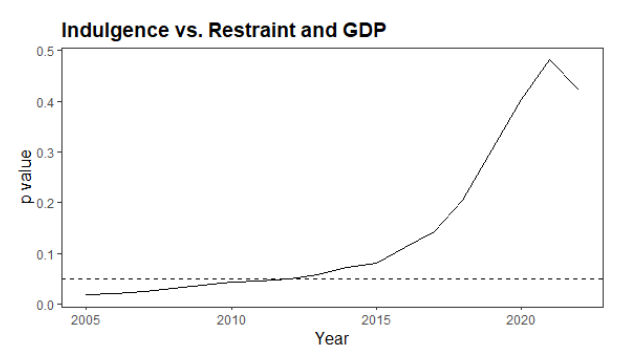

Maybe I should have seen it coming. If I’m being honest, I already knew that even very concrete Data Availability Statements are often void. The first time I observed this at scale was in our 2017 study on data sharing and statistical errors (Nuijten et al., 2017). We wrote:

“We recorded 134 cases in articles from PLOS journals where data were promised but not available. This is as much as 29.0% of all PLOS articles that promised data. This is in line with the findings of Chambers (2017, p. 86), who found that 25% of a random sample of 50 PLOS papers employing brain-imaging methods stated their data was available, whereas in fact it was not. In FP [Frontiers in Psychology], we found a similar percentage: of the twelve articles that promised data, three articles (25.0%) did not have available data.”

Even after the replication crisis and the credibility revolution, this pattern seems to persist. A recent paper reported that almost 70% of clinical trial reports declared data sharing, but only an extremely depressing <1% actually made their data openly available (Danchev et al., 2021). Similarly, others found that:

“Among 1792 manuscripts in which the DAS [Data Availability Statement] indicated that authors are willing to share their data, 1669 (93%) authors either did not respond or declined to share their data with us. Among 254 (14%) of 1792 authors who responded to our query for data sharing, only 123 (6.8%) provided the requested data.” - Gabelica et al. (2022)

I guess we can feel a bit better about being ignored, knowing that this is apparently the behavioral standard in the field.

Nullius in Verba

I’m sure you have sensed my frustration by now. Knowing that fruitless data requests often happen (and that it probably wasn’t personal) doesn’t make it better. If anything, it makes it worse.

My frustration is caused by two things. First, I very much agree with the slogan “Science without open is just anecdote”,⁴ the modern version of Nullius in Verba (take nobody’s word for it).

Knowing about the prevalence of statistical errors, the widespread opportunistic use of researcher degrees of freedom, and even the concerning rise of paper mills and AI-generated fake articles, it is very hard for me to see any justification for not sharing data.

And yes, there are certainly cases where data sharing may be difficult. Privacy, legal restrictions, I get it. But even then, there should always be a way for an independent scientist to verify claims, even if that means travelling to the authors’ institution and reproducing the results on a desktop disconnected from the internet. We can’t simply end our papers with “trust me, I know what I’m doing”.

The data are the science. We need them for a trustworthy knowledge base.

Data: Missing, Accountability: Missing

A second cause of frustration is the fact that it seems impossible to hold anyone accountable.

After we were ignored for almost six months, we decided to contact the journal that published the study. Since the study was published in the prestigious clinical journal JAMA Psychiatry, we had high hopes that they would take swift action and put pressure on the authors to share the data. After all, it’s the journal that is responsible for its contents, and a demonstrably void Data Availability Statement should be dealt with.

They got back to us fairly quickly (hurray!) to say:

“Thank you for your email. While the JAMA Psychiatry encourages data sharing it does not mandate such sharing. You might send a follow-up to your request with your project proposal to the corresponding author [...]. However, there is nothing the journal can do to mandate approval of your request.”

There is nothing the journal can do? Actually, there is a lot the journal could do. They could temporarily retract the paper until the data are shared. They could issue an expression of concern about the data availability. They could publish a correction in which the Data Availability Statement actually states “the data are not available”. If nothing else, they could send the authors a stern email.

Open-Washing

Note that it is irrelevant whether the journal does or does not mandate data sharing. The matter at hand is not whether data should always be shared. The point is that JAMA Psychiatry requires a Data Availability Statement but does not seem to be concerned when authors use it to make false promises.

So why do journals even bother with mandatory Data Availability Statements, if they do not seem to care what is in them?

A more cynical person than me might say: “because then journals do get the credit for being open,⁵ but don’t have to commit any resources to actually enforce it.” Such behavior is sometimes called “open-washing” (analogous to greenwashing).

In the case of JAMA Psychiatry, this attitude is especially surprising, given that the journal is a member of the ICMJE (International Committee of Medical Journal Editors). ICMJE member journals are explicitly committed to encouraging data sharing, and authors are warned that even though data sharing is not mandatory, it could affect editorial decisions. JAMA itself even explicitly stated:

“Although many issues must be addressed for data sharing to become the norm, we remain committed to this goal.” - Taichman et al. (2017)

This seems to be in stark contrast to the “not our problem” vibe of the email.

Journals: Take Responsibility

But JAMA Psychiatry is not the only journal where Data Availability Statements cannot be taken at face value. A recent paper compares (actual) data sharing in surgical journals before and after they adopted the ICMJE data sharing policy (Bergeat et al., 2022). Before the policy, data were available for 3.1% of RCTs. After the policy, data were available for… 3.1% of RCTs. I have never seen such a beautiful example of a null result.

Now I understand that not every journal can be the next Psychological Science,⁶ where a dedicated editorial team⁷ painstakingly verifies every reported number in a manuscript before it gets published.

But I would have hoped that if you explicitly alert the editorial staff of a high-profile journal that an article they published makes false data-sharing claims, at least something would happen.

Instead, we had to make do with an “I’m sorry, ma’am, there’s nothing we can do.”

Epilogue

We did find a promising clause in the policies of the study’s funder, the NIHR:

“In exceptional circumstances, where data are not shared in accordance with NIHR’s expectations, the matter may be raised with NIHR.”

But then again, statistically speaking, I guess our case is not very exceptional.

Footnotes

1 We have repeatedly emailed the authors in every imaginable combination over a period of nine months, and have been thoroughly ignored.

2 I recognize that we meta-researchers may sometimes be a bit annoying. But it’s all for the greater good!

3 Not that it should matter, given that “Data will be made available for any purpose”.

4 You should totally get the sticker: https://zenodo.org/records/3453126

5 I hear it’s all the rage these days.

6 Although, why not?

7 COI alert: including me.

References

Bergeat, D., Lombard, N., Gasmi, A., Le Floch, B., & Naudet, F. (2022). Data sharing and reanalyses among randomized clinical trials published in surgical journals before and after adoption of a data availability and reproducibility policy. JAMA Network Open, 5(6), e2215209. https://doi.org/10.1001/jamanetworkopen.2022.15209

Danchev, V., Min, Y., Borghi, J., Baiocchi, M., & Ioannidis, J. P. A. (2021). Evaluation of data sharing after implementation of the International Committee of Medical Journal Editors data sharing statement requirement. JAMA Network Open, 4(1), e2033972. https://doi.org/10.1001/jamanetworkopen.2020.33972

Gabelica, M., Bojčić, R., & Puljak, L. (2022). Many researchers were not compliant with their published data sharing statement: A mixed-methods study. Journal of Clinical Epidemiology, 150, 33–41. https://doi.org/10.1016/j.jclinepi.2022.05.019

Nuijten, M. B., Borghuis, J., Veldkamp, C. L., Dominguez-Alvarez, L., Van Assen, M. A., & Wicherts, J. M. (2017). Journal data sharing policies and statistical reporting inconsistencies in psychology. Collabra: Psychology, 3(1). https://doi.org/10.1525/collabra.102

Taichman, D. B., Sahni, P., Pinborg, A., Peiperl, L., Laine, C., James, A., Hong, S.-T., Haileamlak, A., Gollogly, L., Godlee, F., Frizelle, F. A., Florenzano, F., Drazen, J. M., Bauchner, H., Baethge, C., & Backus, J. (2017). Data sharing statements for clinical trials: A requirement of the International Committee of Medical Journal Editors. JAMA, 317(24), 2491–2492. https://doi.org/10.1001/jama.2017.6514